October 22nd, 2025

Introducing Julius Slack Agent (And how to build your own)

By Zach Perkel · 8 min read

We recently shipped Julius to Slack: a real agent that queries databases, generates visualizations, and handles multi-step analysis directly in your workspace.

Special thanks to Jaden Moore for leading the engineering on this project.

The challenge wasn't getting an LLM to respond in Slack. That's trivial. The challenge was making it production-ready: handling concurrent requests, executing untrusted code safely, maintaining conversation context across threads, and most importantly, not hallucinating when querying your company's data.

This post walks through the architecture we built and the problems we encountered. If you're building agents that need to execute code, query databases, or maintain complex state, hopefully this saves you some pain.

The Naive Approach (And Why It Doesn't Work)

Problem 1: Context Explosion

Slack threads can have hundreds of messages. Do you send the entire thread to the LLM every time? That's expensive and slow. Do you send just the last few messages? Then the agent forgets what data source you were working with.
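To make the tradeoff concrete, here is a minimal sketch of one possible middle ground. It is not the approach Julius uses (that is the context-gathering step described below). It assumes each message can be tagged with the data source it selected; the `Message` type, its `data_source` field, and `build_context` are all hypothetical. The sketch keeps a short recent window but carries forward the last data-source selection if it has scrolled out of that window.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Message:
    author: str
    text: str
    # Hypothetical tag: set when this message picked a data source ("use the sales DB").
    data_source: Optional[str] = None

def build_context(thread: list[Message], max_recent: int = 10) -> list[Message]:
    """Keep the last `max_recent` messages, and if none of them names a data
    source, prepend the most recent older message that does."""
    recent = thread[-max_recent:] if max_recent > 0 else []
    if any(m.data_source for m in recent):
        return recent
    older = thread[: len(thread) - len(recent)]
    for m in reversed(older):
        if m.data_source:
            return [m] + recent
    return recent

# A 21-message thread where the data source was chosen in the very first message.
thread = [Message("alice", "Use the warehouse DB", data_source="warehouse")]
thread += [Message("alice", f"follow-up {i}") for i in range(20)]
context = build_context(thread, max_recent=5)
print([m.text for m in context])
```

The cost stays bounded by `max_recent` plus one message, while the one fact that naive truncation would lose survives.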

Problem 2: Hallucinated SQL

LLMs love to hallucinate table names and column names. Without access to your actual schema, they'll confidently write queries against tables that don't exist.
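A cheap first line of defense is to check generated SQL against the real schema before running it. The sketch below is a deliberately naive, regex-based check (the names `unknown_tables` and `_TABLE_REF` are hypothetical). It only looks at identifiers after `FROM` and `JOIN`, so a proper SQL parser would be needed in practice.

```python
import re

# Naive pattern: identifiers that follow FROM or JOIN. Not a real SQL parser:
# it ignores CTE names, quoted identifiers, and false matches such as
# EXTRACT(YEAR FROM created_at).
_TABLE_REF = re.compile(r"\b(?:from|join)\s+([A-Za-z_][\w.]*)", re.IGNORECASE)

def unknown_tables(sql: str, known_tables: set[str]) -> set[str]:
    """Return table names referenced in `sql` that are not in the known schema."""
    referenced = {name.lower() for name in _TABLE_REF.findall(sql)}
    known = {t.lower() for t in known_tables}
    return referenced - known

sql = "SELECT o.id FROM orders o JOIN customer_accounts c ON o.cid = c.id"
print(unknown_tables(sql, {"orders", "customers"}))
```

A non-empty result is a signal to regenerate the query with the schema in context rather than send it to the database.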

Problem 3: Code Execution

If your agent can write Python to analyze data (which is the whole point), you need a secure sandbox. Running arbitrary LLM-generated code on your servers is a spectacular way to have a very bad day.

Problem 4: Response Time

Slack users expect responses in seconds, not minutes. But complex analysis might require multiple API calls, database queries, and code executions. How do you maintain the illusion of responsiveness while doing real work?
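One common pattern is to acknowledge immediately and do the slow work afterwards. Below is a minimal asyncio sketch, assuming hypothetical `post` and `analyze` coroutines. It does not use the Slack SDK; the fakes in `main` stand in for posting to a thread and for the real multi-step analysis.

```python
import asyncio
from typing import Awaitable, Callable

PostFn = Callable[[str], Awaitable[None]]
AnalyzeFn = Callable[[str], Awaitable[str]]

async def handle_request(question: str, post: PostFn, analyze: AnalyzeFn) -> None:
    # Acknowledge right away so the user sees activity within a second or two.
    await post(f"Working on it: {question!r}")
    # Then do the slow, multi-step work and post the result when it's ready.
    result = await analyze(question)
    await post(result)

async def main() -> list[str]:
    sent: list[str] = []

    async def fake_post(text: str) -> None:
        sent.append(text)

    async def fake_analyze(q: str) -> str:
        await asyncio.sleep(0.1)  # stand-in for queries and code execution
        return f"Answer to {q}"

    await handle_request("weekly revenue", fake_post, fake_analyze)
    return sent

if __name__ == "__main__":
    print(asyncio.run(main()))
```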

So here's what we actually built:

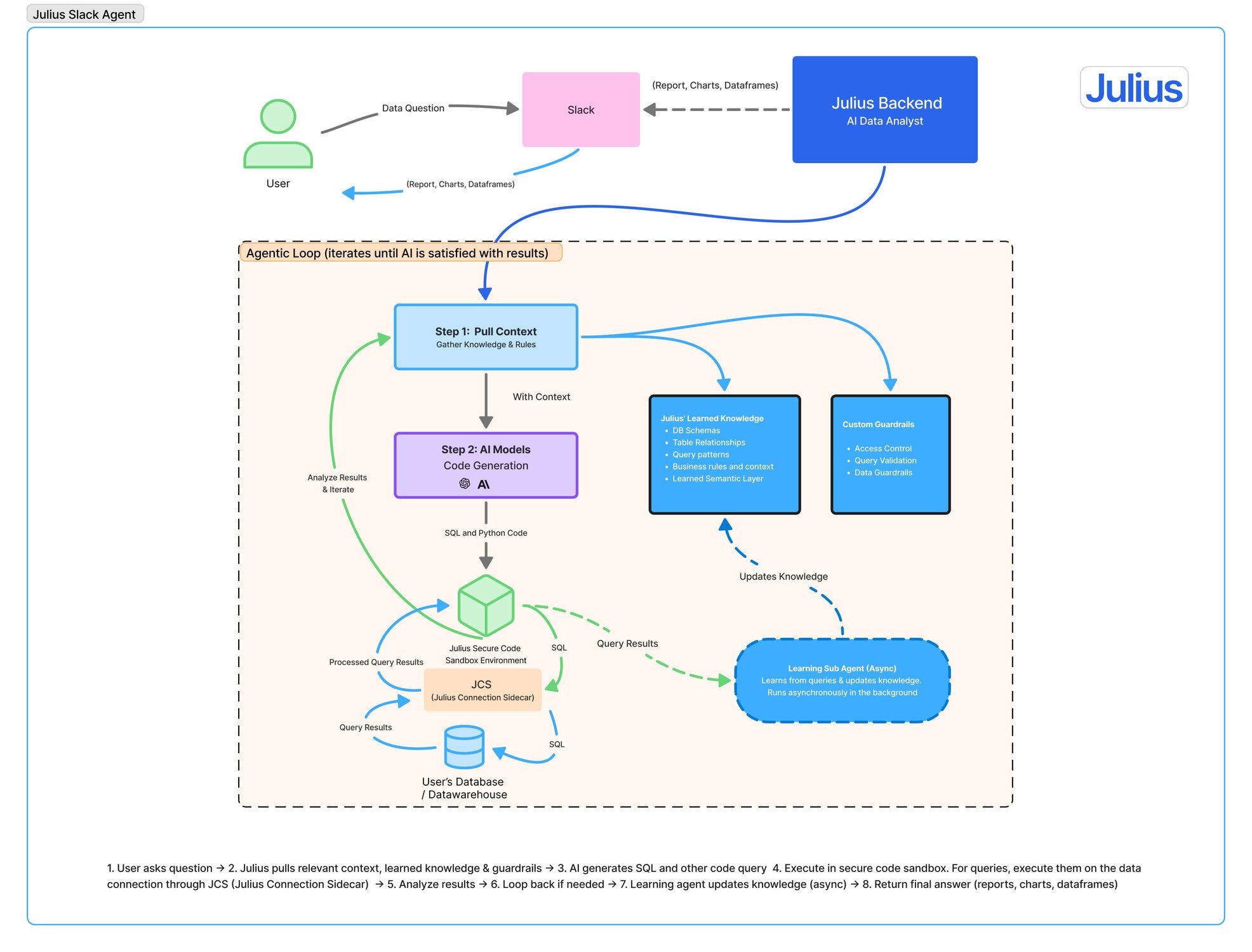

The key insight is that this isn't a chatbot; it's an agentic loop that iterates until the AI is satisfied with its results. Let me break down each component.

Context Gathering

Before the AI even thinks about writing code, we pull everything it needs to know. This includes learned knowledge from previous queries run in this workspace, table relationships discovered through usage, common query patterns, and failed queries along with why they failed.

We also gather the database schema—actual table names and column names, relationships between tables, column types and sample data, plus query patterns that worked before. On top of this, we apply custom guardrails including access control rules, query validation rules, and data sanitization requirements.
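As an illustration, the gathered context can be bundled into one structure and rendered into a prompt section. This is a minimal sketch: the `GatheredContext` class, its field names, and the prompt layout are hypothetical, and relationships and sample data are left out for brevity.

```python
from dataclasses import dataclass, field

@dataclass
class GatheredContext:
    # Learned knowledge from earlier queries in this workspace.
    learned_notes: list[str] = field(default_factory=list)
    failed_queries: list[tuple[str, str]] = field(default_factory=list)  # (sql, why it failed)
    # Schema: table name -> {column name: column type}.
    schema: dict[str, dict[str, str]] = field(default_factory=dict)
    # Guardrails expressed as plain-text rules.
    guardrails: list[str] = field(default_factory=list)

    def to_prompt(self) -> str:
        """Render every non-empty part of the context as a Markdown-ish prompt section."""
        lines = ["## Schema"]
        for table, cols in sorted(self.schema.items()):
            col_list = ", ".join(f"{c} {t}" for c, t in cols.items())
            lines.append(f"- {table}({col_list})")
        if self.learned_notes:
            lines.append("## Learned notes")
            lines += [f"- {n}" for n in self.learned_notes]
        if self.failed_queries:
            lines.append("## Queries that failed before")
            lines += [f"- {sql}  -- {why}" for sql, why in self.failed_queries]
        if self.guardrails:
            lines.append("## Rules")
            lines += [f"- {g}" for g in self.guardrails]
        return "\n".join(lines)

ctx = GatheredContext(
    schema={"orders": {"id": "int", "created_at": "timestamp"}},
    guardrails=["Never select the email column"],
)
print(ctx.to_prompt())
```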

This context gathering happens in parallel with the initial LLM call, using a purpose-built "Learned Semantic Layer" that we update asynchronously. More on this later.
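Running the lookups alongside the first model call is a natural fit for `asyncio.gather`. In the sketch below, every function is a hypothetical stand-in that just sleeps. The article doesn't say what the initial LLM call does, so `initial_llm_call` is a placeholder.

```python
import asyncio
import time

async def fetch_learned_knowledge(workspace: str) -> list[str]:
    await asyncio.sleep(0.2)  # stand-in for a semantic-layer lookup
    return [f"{workspace}: orders.customer_id -> customers.id"]

async def fetch_schema(workspace: str) -> dict[str, list[str]]:
    await asyncio.sleep(0.2)  # stand-in for schema introspection
    return {"orders": ["id", "customer_id", "total"]}

async def initial_llm_call(question: str) -> str:
    await asyncio.sleep(0.2)  # stand-in for the first model call
    return f"plan for: {question}"

async def gather_context(workspace: str, question: str) -> list:
    # The three awaitables run concurrently, so the wall-clock cost is roughly
    # that of the slowest one (~0.2 s here) rather than the sum (~0.6 s).
    return await asyncio.gather(
        fetch_learned_knowledge(workspace),
        fetch_schema(workspace),
        initial_llm_call(question),
    )

if __name__ == "__main__":
    start = time.perf_counter()
    notes, schema, plan = asyncio.run(gather_context("acme", "Which customers churned?"))
    print(notes, schema, plan, f"{time.perf_counter() - start:.2f}s")
```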

Code Generation

With context in hand, the AI generates SQL and Python code. The model receives the user's question, relevant schema information from the context-gathering step, previous conversation context, and any custom rules or guardrails.

The AI then produces the actual queries and analysis code needed to answer the question. This happens through standard LLM inference; we use models capable of structured outputs so that the response is parseable and executable.
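Even with structured outputs, it helps to validate the response before anything is executed. The sketch below assumes a hypothetical JSON shape with `sql`, `python`, and `explanation` fields. The article doesn't specify the actual schema.

```python
import json
from dataclasses import dataclass

@dataclass
class GeneratedCode:
    sql: str
    python: str
    explanation: str

REQUIRED_KEYS = {"sql", "python", "explanation"}

def parse_model_output(raw: str) -> GeneratedCode:
    """Parse a JSON response from the model and fail loudly if it's malformed."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on invalid JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    if not all(isinstance(data[k], str) for k in REQUIRED_KEYS):
        raise ValueError("all fields must be strings")
    return GeneratedCode(**{k: data[k] for k in REQUIRED_KEYS})

out = parse_model_output(
    '{"sql": "SELECT 1", "python": "print(1)", "explanation": "demo"}'
)
print(out)
```

A `ValueError` here can be fed back to the model as an error to fix, just like a failed query.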

The key is that the AI isn't just generating code blindly. It has access to your actual schema, knows what tables and columns exist, and has learned from previous successful queries in your workspace.

Secure Execution (enter the Julius Connection Sidecar)

Every piece of generated code runs in an isolated sandbox—what we call the Julius Connection Sidecar (JCS). This is a containerized environment that has no network access except to the user's database via a strictly controlled connection. It runs with minimal privileges, has CPU and memory limits, times out after 30 seconds, and logs everything for auditing.
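JCS itself is a container, but the resource-limit part of the idea can be sketched with the standard library alone. The sketch below is POSIX/Linux-oriented, and the 512 MiB memory cap is an assumption; only the 30-second timeout comes from the text. It is not a real sandbox: it provides no filesystem or network isolation, and `preexec_fn` is documented as unsafe in multithreaded programs.

```python
import resource
import subprocess
import sys

TIMEOUT_SECONDS = 30                    # wall-clock limit from the text
MEMORY_LIMIT_BYTES = 512 * 1024 * 1024  # assumption: 512 MiB address-space cap

def _limit_resources() -> None:
    # Runs in the child process just before exec (POSIX only).
    resource.setrlimit(resource.RLIMIT_CPU, (TIMEOUT_SECONDS, TIMEOUT_SECONDS))
    resource.setrlimit(resource.RLIMIT_AS, (MEMORY_LIMIT_BYTES, MEMORY_LIMIT_BYTES))

def run_untrusted(code: str) -> subprocess.CompletedProcess:
    """Run `code` in a separate Python process with CPU, memory, and time limits.

    No filesystem or network isolation: a container (or similar) is still needed."""
    return subprocess.run(
        [sys.executable, "-I", "-c", code],  # -I: isolated mode (no env vars, no user site-packages)
        capture_output=True,
        text=True,
        timeout=TIMEOUT_SECONDS,  # raises subprocess.TimeoutExpired if exceeded
        preexec_fn=_limit_resources,
    )

result = run_untrusted("print(sum(range(10)))")
print(result.returncode, result.stdout.strip())
```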

SQL queries go directly to the user's database through JCS. The AI never sees credentials; we handle that through a connection pool managed by our backend.
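The division of responsibility can be sketched as a pool that owns the connection details, while the model only ever supplies SQL text. This is a minimal illustration using `sqlite3` as a stand-in driver. `ConnectionPool` and `run_generated_sql` are hypothetical names, not Julius's backend.

```python
import queue
import sqlite3
from contextlib import contextmanager
from typing import Iterator

class ConnectionPool:
    """Fixed-size pool owned by the backend. The DSN (which in a real setup
    would carry credentials) is never passed to, or returned from, the model."""

    def __init__(self, dsn: str, size: int = 4) -> None:
        self._pool: "queue.Queue[sqlite3.Connection]" = queue.Queue(maxsize=size)
        for _ in range(size):
            self._pool.put(sqlite3.connect(dsn, check_same_thread=False))

    @contextmanager
    def connection(self) -> Iterator[sqlite3.Connection]:
        conn = self._pool.get()  # blocks until a connection is free
        try:
            yield conn
        finally:
            self._pool.put(conn)  # always return it, even if the query failed

def run_generated_sql(pool: ConnectionPool, sql: str) -> list[tuple]:
    # The model supplies only `sql`; connection details stay inside the pool.
    with pool.connection() as conn:
        return conn.execute(sql).fetchall()
```

With SQLite's `:memory:` DSN every connection is a separate database, so a quick demo should use `size=1` or a file path.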

Analysis and Iteration

Here's where the "agentic" part comes in. After executing code, we don't just return results—we feed them back to the AI:

Iteration 1: The query returns 0 rows. The AI realizes it used the wrong table name and regenerates the query with the correct table.

Iteration 2: The query succeeds, but the numbers look off. The AI notices a timezone issue in the date filtering, fixes the query, and re-runs it.

Iteration 3: The results look good. The AI generates visualization code, creates the chart, and returns it to the user.

We limit the number of iterations to prevent infinite loops. In practice, most requests resolve in just a few iterations.
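The loop itself can be sketched with the model and sandbox abstracted as callables. Everything here is hypothetical, including the `agent_loop` signature and the cap of 5. The article doesn't state its actual limit.

```python
from dataclasses import dataclass
from typing import Callable, Optional

MAX_ITERATIONS = 5  # assumption: the real cap isn't stated

@dataclass
class Attempt:
    code: str
    output: str
    ok: bool

def agent_loop(
    question: str,
    generate: Callable[[str, list[Attempt]], str],       # LLM: question + history -> code
    execute: Callable[[str], tuple[str, bool]],          # sandbox: code -> (output, succeeded)
    is_satisfied: Callable[[str, list[Attempt]], bool],  # LLM judges the latest result
    max_iterations: int = MAX_ITERATIONS,
) -> Optional[Attempt]:
    history: list[Attempt] = []
    for _ in range(max_iterations):
        code = generate(question, history)  # earlier attempts are fed back in
        output, ok = execute(code)
        history.append(Attempt(code, output, ok))
        if ok and is_satisfied(question, history):
            return history[-1]
    return None  # gave up; the caller can report the last error to the user

# Fakes: the first two queries "succeed" with 0 rows, the third finds data.
def gen(q: str, h: list[Attempt]) -> str:
    return f"query_v{len(h)}"

def exe(code: str) -> tuple[str, bool]:
    return ("42 rows", True) if code == "query_v2" else ("0 rows", True)

def sat(q: str, h: list[Attempt]) -> bool:
    return h[-1].output != "0 rows"

print(agent_loop("q", gen, exe, sat))
```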

Async Learning

This is the secret sauce. While the user sees their results, a background learning agent updates the semantic layer with discovered table relationships, records which queries succeeded and failed, identifies common patterns in this workspace, and updates guardrails based on errors.
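A toy version of the background step is shown below. It records only which queries worked or failed; relationship discovery, pattern mining, and guardrail updates are omitted. `SemanticLayer`, `hints`, and `learn_in_background` are hypothetical names, and a plain thread stands in for whatever job system the real learning agent runs on.

```python
import threading
from collections import defaultdict
from typing import Optional

class SemanticLayer:
    """Toy in-memory stand-in for a per-workspace learned semantic layer."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self.successes: dict[str, list[str]] = defaultdict(list)
        self.failures: dict[str, list[tuple[str, str]]] = defaultdict(list)

    def record(self, workspace: str, sql: str, error: Optional[str] = None) -> None:
        with self._lock:  # several background learners may write at once
            if error is None:
                self.successes[workspace].append(sql)
            else:
                self.failures[workspace].append((sql, error))

    def hints(self, workspace: str) -> list[str]:
        """What the context-gathering step could read on the next request."""
        with self._lock:
            ok = [f"worked: {s}" for s in self.successes[workspace]]
            bad = [f"failed ({e}): {s}" for s, e in self.failures[workspace]]
        return ok + bad

def learn_in_background(layer: SemanticLayer, workspace: str,
                        attempts: list[tuple[str, Optional[str]]]) -> threading.Thread:
    """Record each (sql, error-or-None) attempt without blocking the reply path."""
    def work() -> None:
        for sql, error in attempts:
            layer.record(workspace, sql, error)

    thread = threading.Thread(target=work, daemon=True)
    thread.start()
    return thread
```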

Next time someone asks a similar question, the context gathering step has better information, and the AI generates correct code on the first try.